How to Read Graph DB Benchmarks (Part 1 of 2)

The main goal of this article is to introduce basic knowledge about graph database, graph computing and analytics, and to help readers interpret benchmark testing reports, and to validate if the results are correct and sensible.

Basic Knowledge

There are mainly 2 types of data operations in any graph database:

- Meta-data Operation: Also known as operation against vertex, edge, or their attributes. There are mainly 4 types of meta operations, specifically CURD (Create, Update, Read or Delete).

- High-dimensional Data Operation: By saying “high-dimensional”, we are referring to high dimensional data structures in the resulting datasets, for instance, a group of vertices or edges, a network of paths, a subgraph or some mixed types of sophisticated results out of graph traversal – essentially, heterogeneous types of data can be mixed and assembled in one batch of result therefore making examination of such result both exciting and perplexing.

High-dimensional data manipulation poses as a key difference between graph database and other types of DBMS, we’ll focus on examining this unique feature of graph DB across the entire article.

There are 3 main types of high-dimensional data operations, which are frequently encountered in most benchmark testing reports:

- K-Hop: K-hop query against a certain vertex will yield all vertices that are exactly K-hop away from the source vertex in terms of shortest-path length. K-hop queries have many variations, such as filtering by certain edge direction, vertex or edge attribute. There is a special kind of K-hop query which is to run against all vertices of the entire graph, it is also considered a type of graph algorithm.

- Path: Path queries have many variations, shortest-path queries are most used, with the addition of template-based path queries, circle-finding path queries, automatic-networking queries and auto-spread queries, etc.

- Graph Algorithm: Graph algorithms essentially are the combination of meta-data, K-hop, path queries. There are rudimentary algorithms like degrees, but there are highly sophisticated algorithms like Louvain, and there are extremely complex, in terms of computational complexity, algorithms such as betweeness centrality or full-graph K-hop, particularly when K is much larger than 1.

Most benchmark reports are reluctant to disclose how high-dimensional data operations are implemented, and this vagueness has created lots of trouble for readers to better understand graph technology. We hereby clarify, there are ONLY 3 types of implementations in terms of how graph data is traversed:

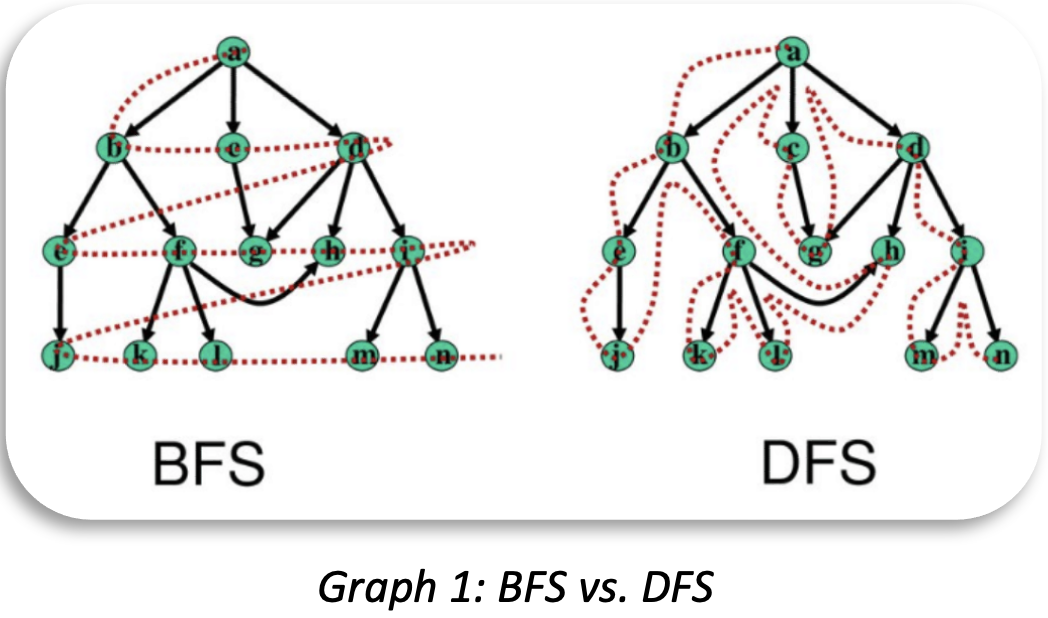

- BFS (Breadth First Search): Shortest Path, K-hop queries are typically implemented using BFS method. It’s worth clarifying that you may use DFS to implement, say, shortest path finding, but in most real-world applications, particularly with large volumes of data, BFS is guaranteed to be more efficient and logically more straightforward than DFS, period.

- DFS (Depth First Search): Queries like circle finding, auto-networking, template-based path queries, random walks desire to be implemented using DFS method. If you find it hard to understand the difference between BFS and DFS, refer to the below diagram and a college textbook on Graph Theory.

- Combination of Both (BFS + DFS): There are scenarios where both BFS and DFS are applied, such as template-based K-hop queries, customized graph algorithms, etc.

Graph 1 illustrates the traversal difference between BFS and DFS. In short, under BFS mode, if qualified 1st hop neighbors are not all visited first, the access to any 2nd hop neighbor will not start, and traversal will continue in this way until all data(neighbors) are visited hop after hop. Based on such description, it’s not hard to tell that if a certain K-hop or shortest-path query only returns a pre-defined limited number of neighbors or paths (say, 1 or 10), it’s guaranteed that the query implementation is wrong! Because you do NOT know the total number of qualified neighbors or paths beforehand.

Typical Benchmark Datasets

There are 3 types of datasets for graph system benchmarking:

- Social or Road Network Dataset: Typical social network datasets like Twitter-2010 and Amazon0601 have been commonly used for graph database benchmarking. There are also road network or web-topology based datasets used in academic benchmarks such as UC Berkeley’s GAP.

- Synthetic Dataset: HPC (High-Performance Computing) organization Graph-500 has published some synthetic datasets for graph system benchmark. International standard organization LDBC (Linked Data Benchmark Council) also use data-generating tools to create synthetic social-network datasets for benchmarking purposes.

- Financial Dataset: The latest addition to the family of graph benchmark datasets is financial dataset, mostly are found in private and business-oriented setup, because the data are often based on real transactions. There are several public datasets such as Alimama’s e-commerce dataset, and LDBC’s FB (FinBench) dataset which is being drafted and to be released toward the end of 2022 (to replace the LDBC’s SNB dataset which does NOT reflect real-world financial business challenges in terms of data topology and complexity).

As graph database and computing technologies and products continue to evolve, it’s not hard to see that more graph datasets will emerge and better reflect real-world scenarios and business challenges.

Twitter-2010 (42 Million vertices, 1.47 Billion edges, sized at 25GB, downloadable from http://an.kaist.ac.kr/traces/WWW2010.html) is commonly used in benchmarking, we’ll use it as a level playing field to explain how to interpret a benchmark report and how to validate results in the report.

Before we start, let’s get ourselves familiar with a few concepts in graph data modeling by using the Twitter dataset as an example:

- Directed Graph: Every edge has a unique direction, in Twitter, an edge is composed of a starting vertex and an ending vertex, which map to the 2 IDs separated by TAB on each line of the data file, and the significance of the edge is that it indicates the starting vertex (person) follows the ending vertex (person). When modeling the edge in a graph database, the edge will be constructed twice, the first time as StartingVertex EndingVertex, and the second time as EndingVertex StartinVertex (a.k.a, the inverted edge), this is to allow traversing the edge from either direction. If the inverted edge is not constructed, queries’ results will be inevitably wrong (We’ll show you how later).

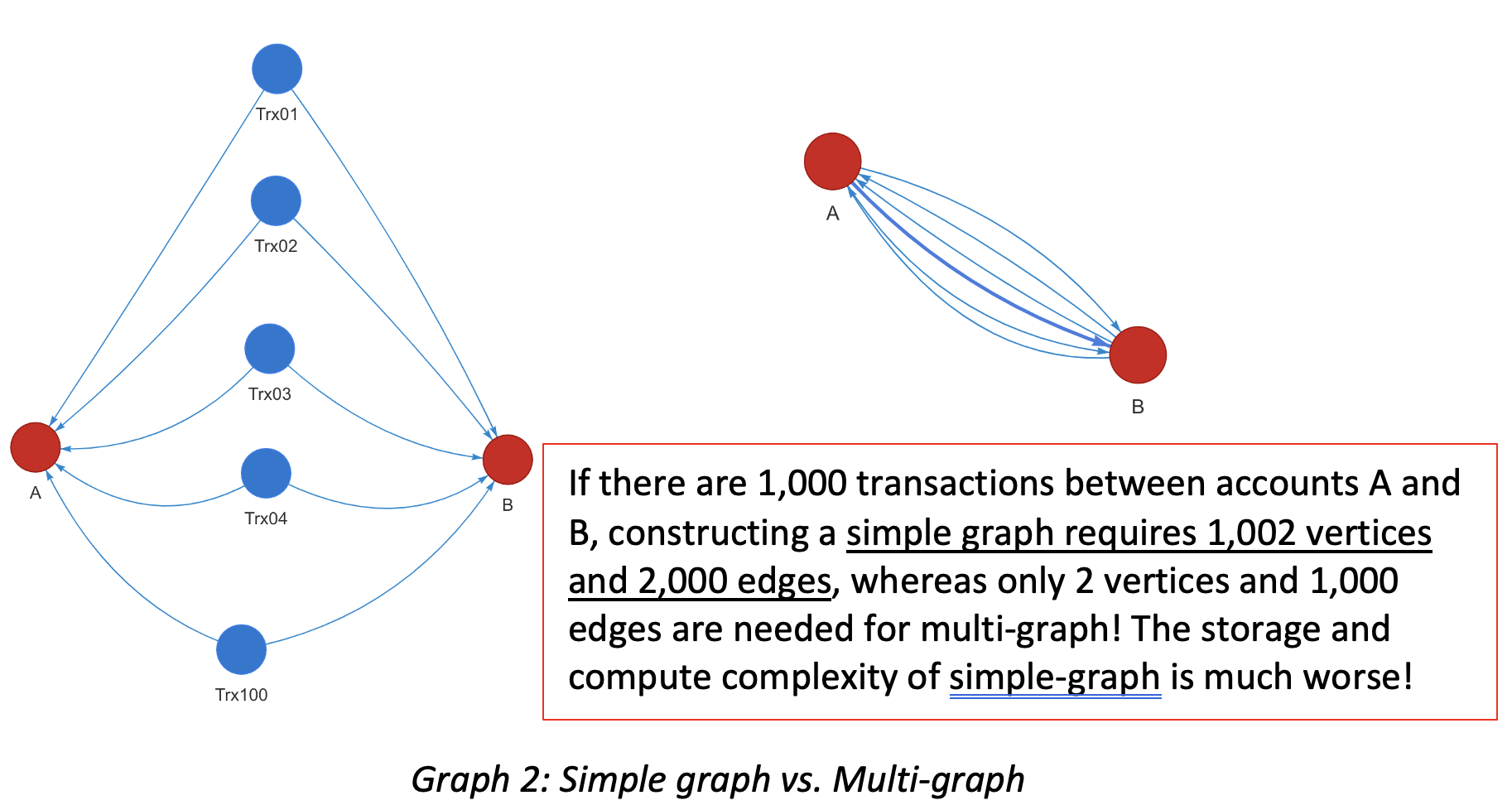

- Simple-graph vs. Multi-graph: If there are more than 2 edges of the same kind between a pair of vertices in either direction, it’s a multi-graph, otherwise, it’s a simple-graph. Twitter and all social network datasets are considered simple graphs because it models a simple following relationship between the users. In financial scenarios, assuming user accounts are vertices and transactions are edges, there could be many transactions in between two accounts, therefore many edges. Some graph databases are designed to be simple-graph instead of multi-graph, this will create lots of problems in terms of data modeling efficiency and query results correctness.

- Node-Edge Attributes:Twitter data does NOT carry any node or edge attribute other than the designated direction of the edge. This is different from transactional graph in financial scenarios, where both node and edge may have many attributes so that filtering, sorting, aggregation, attribution analysis can be done with the help of these attributes. There are so-called graph database systems that do NOT support the filtering by node or edge attributes, which are considered impractical and lack of commercial values.

Report Interpretation

Generally speaking, there are at least 5 parts of a graph database benchmark report:

- Testing Bed/Environment: Software and hardware environment, testing datasets, etc.

- Data Ingestion: Volume data loading, database startup time, etc.

- Query Performance: Queries against meta-data, K-hop, shortest-path, algorithm, etc.

- Real-time Update: Modification to graph data’s topology (meta-data) then query against the updated data to validate results.

- Feature Completeness & Usability: Support of API/SDK for secondary development, GQL, management and monitoring, system maintenance toolkits and disaster recovery, etc.

Most benchmark reports would cover the first 3 parts, but not the 4th or 5th parts – the 4th part reflects if the subject database is more of the OLTP/HTAP type or OLAP focused, with the main difference lying at the capability to process dynamically changing datasets. Clearly, if a graph system claims to be capable of OLTP/HTAP, it must allow data to be updated and queried all the time, instead of functioning as a static data warehouse like Apache Spark, or data projections must be finished first before queries can be run against the just-finished static projection like Neo4j does.

Testing Bed

Almost all graph database products are built on X86 architecture as X86-64 CPU powered servers dominate the server marketplace. The situation has recently changed given the rise of ARM, due to its simplistic RISC (instead of X86’s CISC) therefore greener design. There are growing number of commodity PC servers basing on ARM CPUs nowadays. However, only 2 graph database vendors are known to natively support ARM-64 CPU architecture, they are Ultipa and ArangoDB, while other vendors tend to use virtualization or emulation methods which tend to be dramatically slower than native method.

The core hardware configurations are CPU, DRAM, external storage and network bandwidth. The number of CPU cores are most critical, as more cores mean potentially higher parallelism and better system throughput. Most testing beds settle on 16-core and 256GB configuration, however not all graph systems can leverage multiple cores for accelerated data processing, for instance, even enterprise edition Neo4j leverages up to 4-vCPU in terms of parallelization. If another system can leverage 16-vCPU, the performance gain would be at least 400%.

|

Hardware |

Typical Configuration (For Twitter-2010) |

|

# of Server Instances |

3 Normally in Master-Slave setup |

|

CPU |

Intel Xeon 16-core (32 vCPUs) Alternatively, ARM-64 16-core (32vCPUs) |

|

RAM |

256GB |

|

Hard Disk |

1TB HDD (Cloud Based) or SSD (for better I/O) |

|

Network |

5Gbps |

|

Cloud Service Provider |

Azure, AWS, Google Cloud, Alibaba Cloud, or some private cloud environment |

The table above illustrates typical hardware configuration for graph system benchmarking. It’s worthwhile pointing out that graph database is computing first, unlike RDBMS, Data Warehouse or Data Lake systems which are storage first and compute is 2nd class citizen that’s attached to the storage engine. In another word, traditional DBMS addresses I/O-intensive challenges first, while graph system solves computing-intensive challenges first. While both challenges may intersect with each other, that’s why a performance-oriented graph database should set both computing and storage as its first-class citizens.

The software environment is trivial as all known graph systems settle on Linux OS, and leverage container and virtualization technologies for encapsulation and isolation.

Data Ingestion

Data ingestion has a few indicators to watch out for:

- Dataset volume, topological complexity (or density)

- Ingestion time

- Storage space

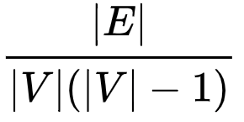

Data volume concerns the total number of vertices and edges, plus complexity (or density), which is normally calculated using the following formula (for directed simple graph):

Where |V| annotates the total number of vertices, and |E| for total number of edges. Per this formula, the highest possible density of a smiple graph is 1. However, as we pointed out earlier, most real-world graphs are NOT simple-graph, but multi-graph, meaning multiple edges may exist between any two vertices, therefore it makes more sense to use vertex-to-edge ratio (|E|/|V|) as a “density” indicator. Taking Twitter-2010 as an example, its ratio is 35.25 (while density is 0.000000846). For any graph dataset with a ratio higher than 10, deep traversal against the dataset poses as great challenge due to exponentially complexity, for instance, 1-hop = 10 neighboring vertices, 2-hop = 100 vertices, 3-hop = 1,000 vertices, and 10-hop = 10,000,000,000.

Ingestion time and storage usage show that how soon can a graph database load the entire dataset, and how much storage space it uses on filesystem. Clearly, for loading time, the shorter the better. Storage space is less of a concern nowadays as storage is super cheap, different database systems may have quite different storage mechanism in terms of replication, sharding, partitioning, normalization and other techniques that may affect computing efficiency and high-availability. The table below shows the data ingestion performance of different graph DBMSes.

|

Raw Data |

Twitter-2010, 42M Nodes, 1.47B Edges, 24.6GB in Size |

||||

|

Graph DB |

Ultipa |

TigerGraph |

Neo4j |

JanusGraph |

ArangoDB |

|

Ingestion Time (seconds) |

2700 |

780 |

3120 |

20800 |

32200 |

|

Relative Time |

1 |

2.98 |

6 |

40 |

61.92 |

|

Storage Space |

30GB |

12GB* |

55GB |

56GB |

128GB |

|

Relative Space |

2.5 |

1 |

4.58 |

4.67 |

10.67 |