Gradient descent stands as a foundational optimization algorithm extensively employed in graph embeddings. Its primary purpose is to iteratively adjust the parameters of a graph embedding model to minimize a predefined loss/cost function.

Several variations of gradient descent have emerged, each designed to address specific challenges associated with large-scale graph embedding tasks. Noteworthy variations include Stochastic Gradient Descent (SGD) and Mini-Batch Gradient Descent (MBGD). These variations update model parameters using the gradient computed from either a single sample or a small subset of the data during each iteration.

Basic Form

Consider a real-life scenario: imagine standing atop a mountain and wanting to descend as quickly as possible. An optimal path down exists, but identifying it in advance is usually difficult. A step-by-step approach is more practical. At each new position, the direction of steepest descent (i.e., the negative gradient) is calculated, and the next step is taken in that direction. This iterative process continues until the foot of the mountain is reached.

Building on this concept, gradient descent is the technique of finding a minimum value by repeatedly moving against the gradient. Conversely, if the aim is to locate a maximum value by moving along the gradient's direction, the approach becomes gradient ascent.

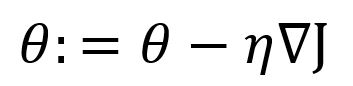

Given a function $f(x)$, the basic form of gradient descent is:

$$x_{t+1} = x_t - \eta \nabla f(x_t)$$

where $\nabla f(x_t)$ is the gradient of the function at the position $x_t$, and $\eta$ is the learning rate. Since the gradient points in the direction of steepest ascent, a minus sign is placed before $\eta \nabla f(x_t)$ to obtain the steepest descent.

The learning rate determines the length of each step taken along the descent direction towards the target. In the mountain example above, the learning rate can be thought of as the distance covered in each step.

The learning rate typically remains constant during training. However, some variants incorporate learning rate scheduling, where the learning rate is adjusted over the course of training, for example by decreasing it gradually or following a predefined schedule. These adjustments are intended to improve convergence and optimization efficiency.
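As an illustration of learning rate scheduling, the following minimal sketch applies an exponential decay to the step length. The schedule, the function names (`exponential_decay`, `descend_with_schedule`), the constants, and the test function $f(x) = x^2$ are all assumptions chosen for this example; other schedules are equally possible.

```python
def exponential_decay(initial_rate, decay, step):
    """Learning rate after `step` updates: initial_rate * decay**step."""
    return initial_rate * decay ** step


def descend_with_schedule(grad, x0, initial_rate=0.4, decay=0.9, steps=50):
    """Gradient descent whose step length shrinks according to the schedule."""
    x = x0
    for t in range(steps):
        rate = exponential_decay(initial_rate, decay, t)
        x = x - rate * grad(x)  # move against the gradient
    return x


# Illustrative function f(x) = x^2: its derivative is 2x and its minimum is at x = 0.
x_final = descend_with_schedule(lambda x: 2 * x, x0=5.0)
print(x_final)
```

Early steps are large and later steps are small, which is the usual motivation for a decaying schedule: fast initial progress followed by finer adjustments near the minimum.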

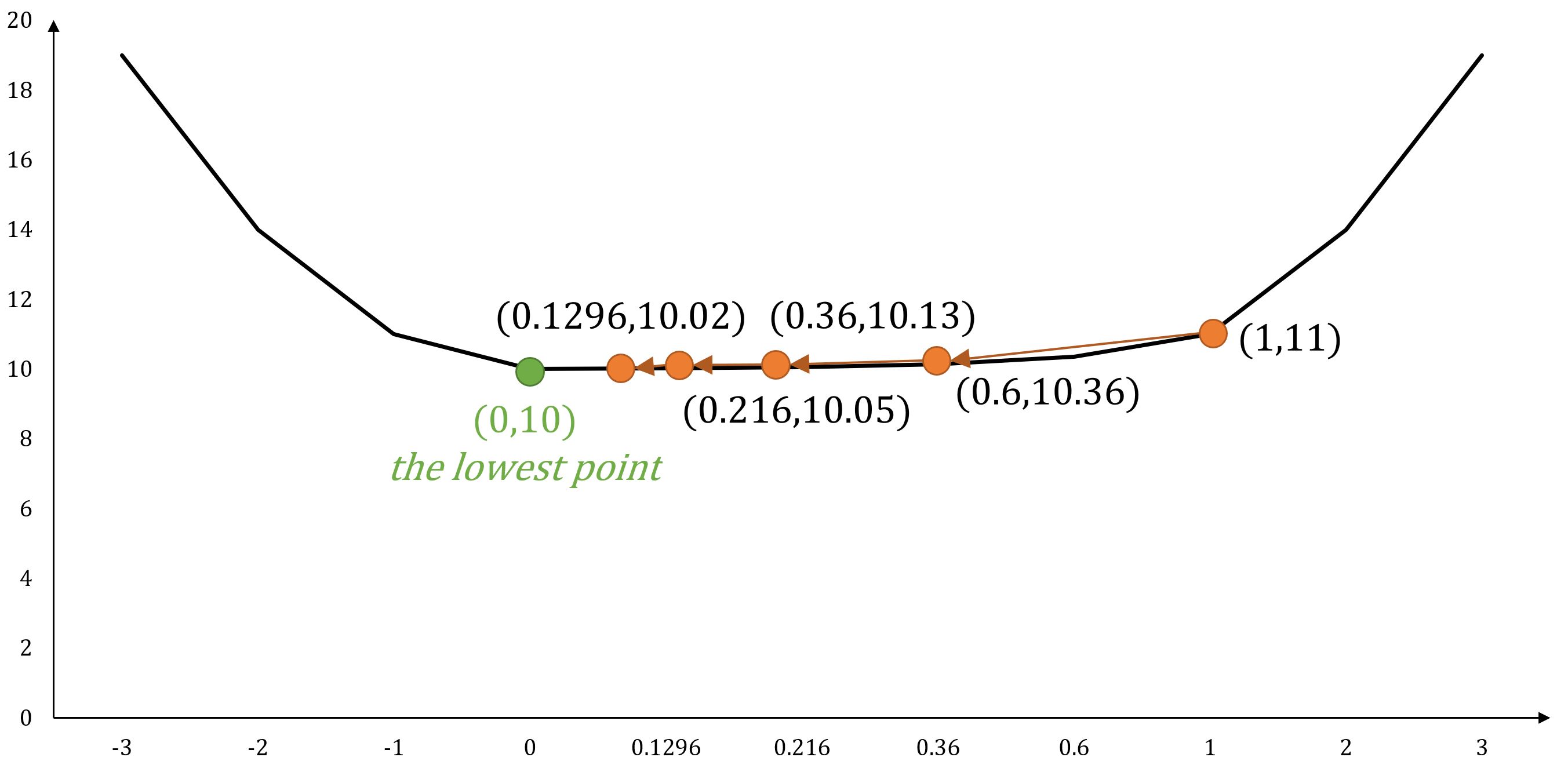

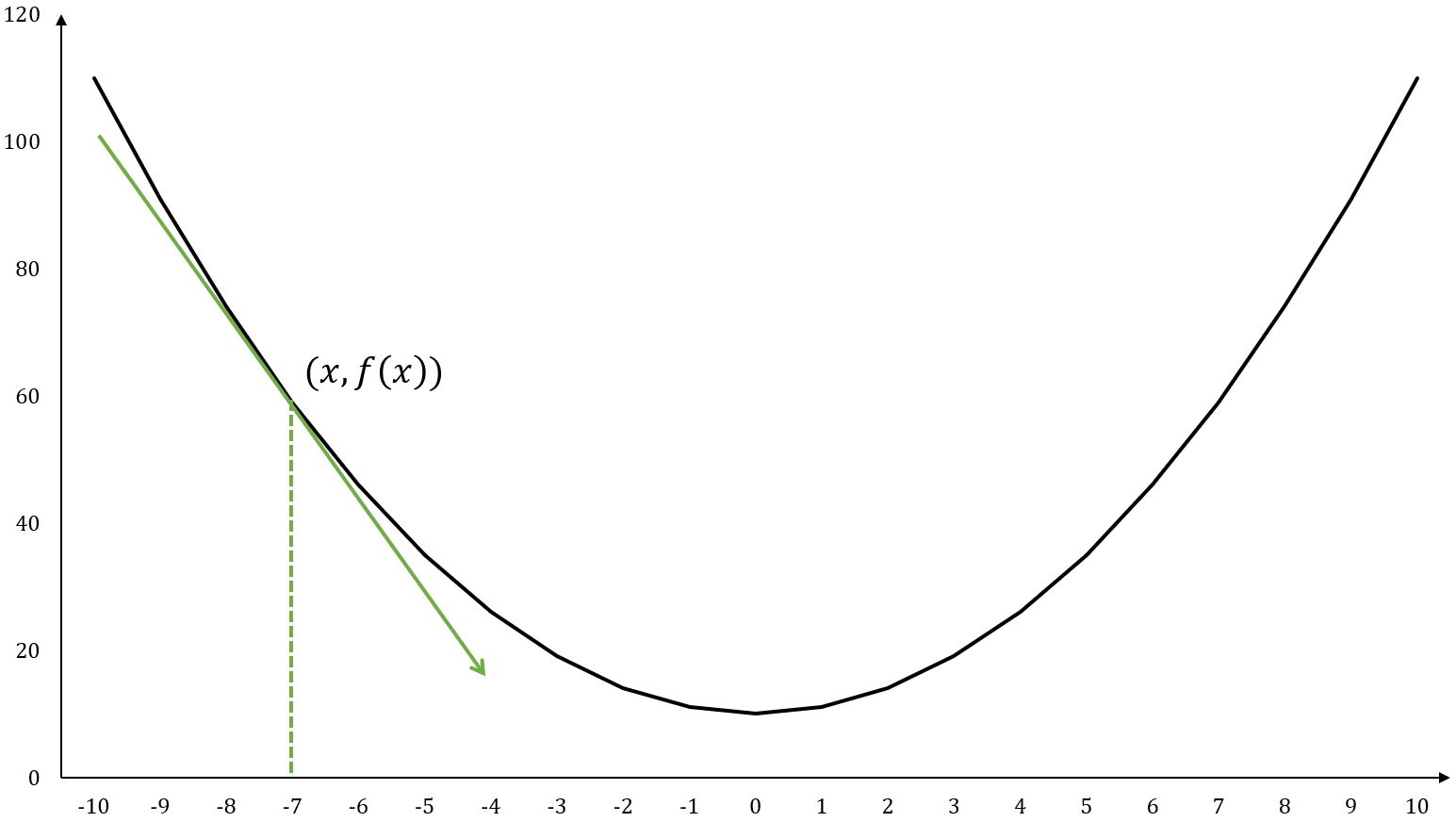

Example: Single-Variable Function

For function , its gradient (in this case, same as the derivative) is .

If starts at position , and set , the next movements following gradient descent would be:

- ...

- ...

As the number of steps increases, we progressively converge towards the position , ultimately reaching the minimum value of the function.
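The iteration can be sketched in a few lines of Python. This is a minimal illustration rather than the exact example above: the function $f(x) = x^2$, the starting point 4, and the learning rate 0.25 are assumptions picked so that each step simply halves the current position.

```python
def gradient_descent_1d(grad, x0, learning_rate, steps):
    """Return every position visited, starting from x0."""
    x = x0
    path = [x]
    for _ in range(steps):
        x = x - learning_rate * grad(x)  # step against the derivative
        path.append(x)
    return path


# Assumed function f(x) = x^2 with derivative f'(x) = 2x.
path = gradient_descent_1d(lambda x: 2 * x, x0=4.0, learning_rate=0.25, steps=20)
print(path[:4])   # first few positions
print(path[-1])   # position after 20 steps, close to the minimum at x = 0
```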

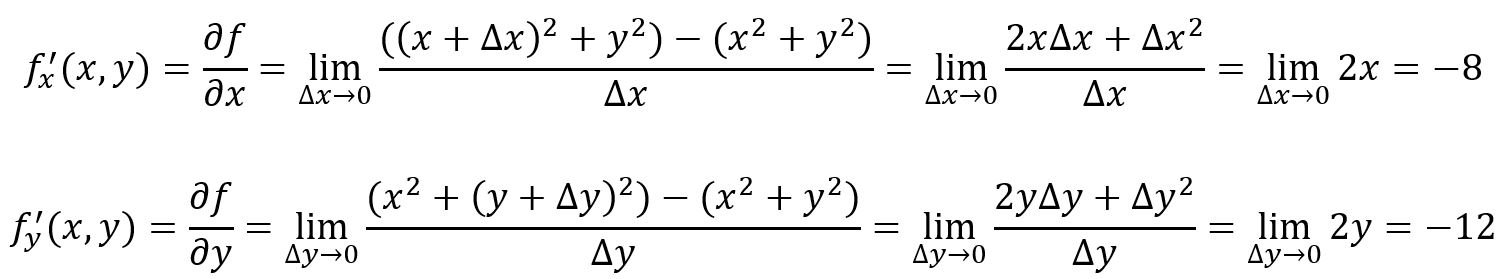

Example: Multi-Variable Function

For function , its gradient is .

If starts at position , and set , the next movements following gradient descent would be:

- ...

- ...

As the number of steps increases, we progressively converge towards the position , ultimately reaching the minimum value of the function.
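The same loop extends to several variables by updating every coordinate with its own partial derivative. The sketch below assumes an illustrative function $f(x, y) = x^2 + 2y^2$ with gradient $(2x, 4y)$; the function, starting point, and learning rate are hypothetical and not taken from the example above.

```python
def gradient_descent(grad, start, learning_rate, steps):
    """Run gradient descent on a multi-variable function given its gradient."""
    point = list(start)
    for _ in range(steps):
        g = grad(point)
        # Update every coordinate against its partial derivative.
        point = [p - learning_rate * gi for p, gi in zip(point, g)]
    return point


def grad_f(point):
    """Gradient of the assumed function f(x, y) = x^2 + 2*y^2."""
    x, y = point
    return [2 * x, 4 * y]


minimum = gradient_descent(grad_f, start=(3.0, -2.0), learning_rate=0.1, steps=100)
print(minimum)  # both coordinates approach 0, the minimizer of f
```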

Application in Graph Embeddings

In the process of training a neural network model in graph embeddings, a loss or cost function, denoted as $J(\theta)$, is frequently employed to assess the disparity between the model's output and the expected outcome. Gradient descent is then applied to minimize this loss function. This involves iteratively adjusting the model's parameters in the direction opposite to the gradient until convergence, thereby optimizing the model.

To strike a balance between computational efficiency and accuracy, several variants of gradient descent have been employed in practice, including:

- Stochastic Gradient Descent (SGD)

- Mini-Batch Gradient Descent (MBGD)

Example

Consider a scenario where a set of $m$ samples is used to train a neural network model. Each sample consists of input values and their corresponding expected output. Let $x^{(i)}$ and $y^{(i)}$ ($i = 1, 2, \dots, m$) denote the $i$-th input value and its expected output.

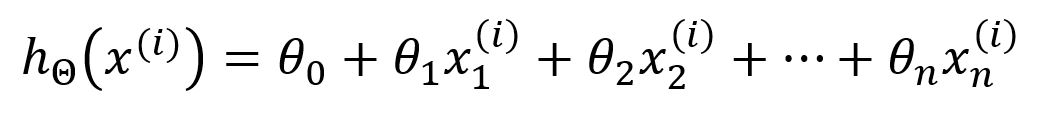

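The hypothesis of the model is defined as:

$$h_\theta(x^{(i)}) = \theta_0 + \theta_1 x_1^{(i)} + \theta_2 x_2^{(i)} + \dots + \theta_n x_n^{(i)}$$

Here, $\theta$ represents the model's parameters $\theta_0$ ~ $\theta_n$, and $x^{(i)}$ is the $i$-th input vector, consisting of $n$ features. The model uses the function $h_\theta$ to compute the output as a weighted combination of the input features.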

The objective of model training is to identify the values of $\theta$ that bring the outputs as close as possible to the expected values. At the start of training, $\theta$ is assigned random values.

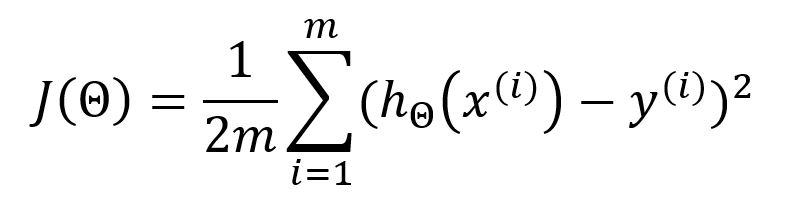

During each iteration of model training, once the outputs for all samples have been computed, the mean square error (MSE) is used as the loss/cost function to measure the average error between each computed output and its corresponding expected value:

$$J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

In the standard MSE formula, the denominator is usually $m$. However, $2m$ is often used instead so that the factor of 2 produced by differentiating the squared term cancels out, which simplifies subsequent calculations. Scaling the loss by a constant does not change the parameter values that minimize it.

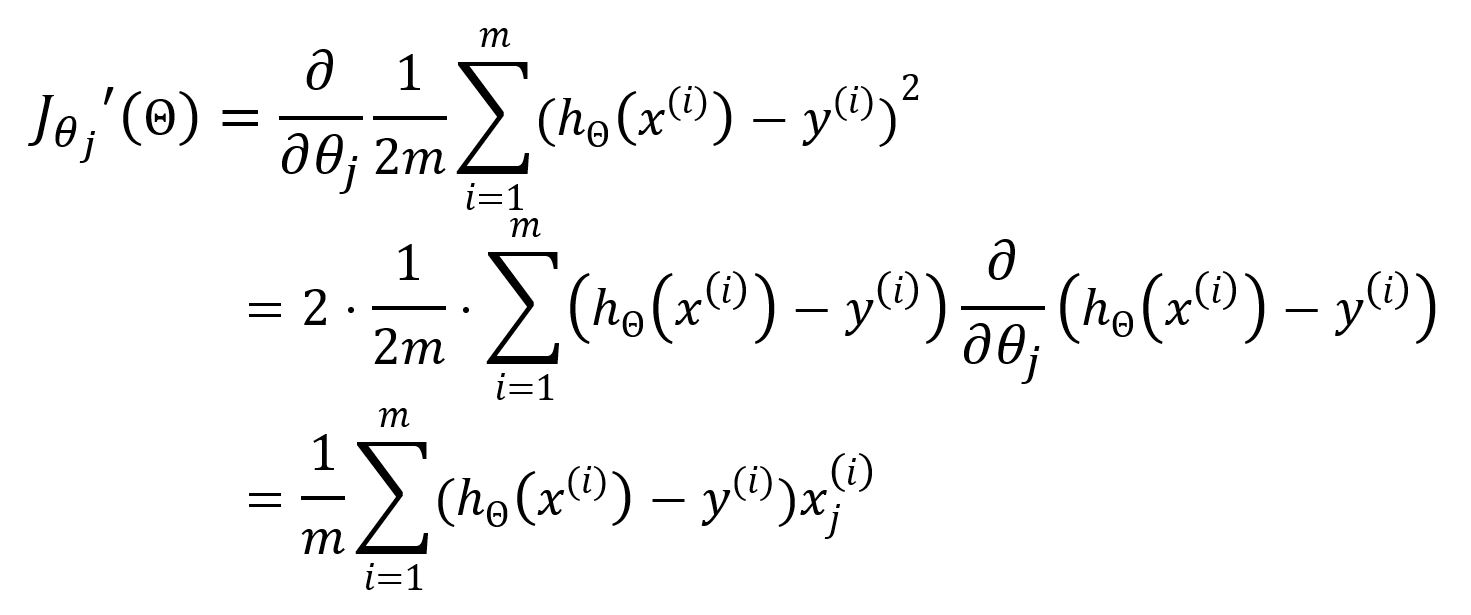

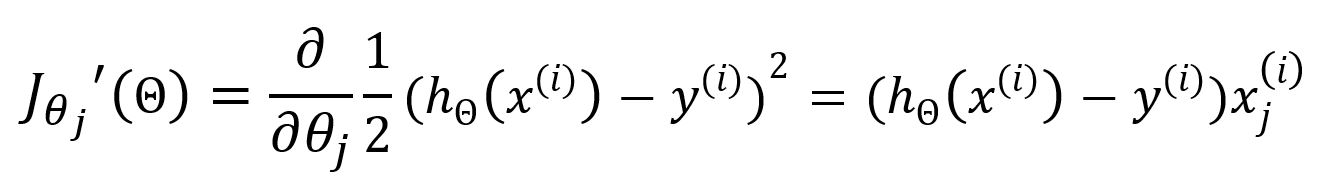

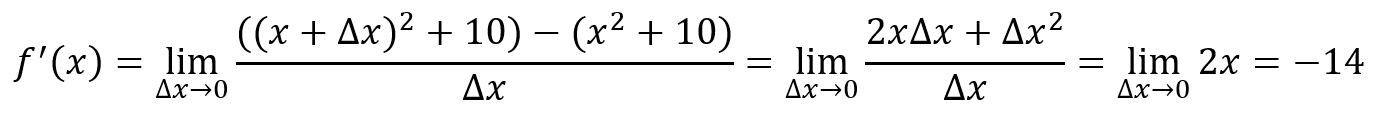

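Subsequently, gradient descent is employed to update the parameters $\theta$. The partial derivative with respect to $\theta_j$ is calculated as follows, taking $x_0^{(i)} = 1$ for the term belonging to $\theta_0$:

$$\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$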

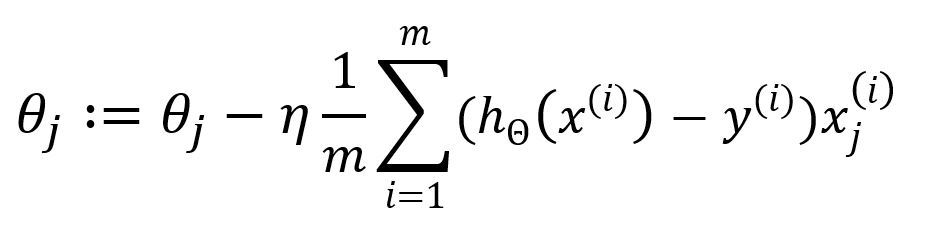

Hence, update $\theta_j$ as:

$$\theta_j := \theta_j - \eta \cdot \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

The summation from $i = 1$ to $m$ indicates that all $m$ samples are utilized in each iteration to update the parameters. This approach is known as Batch Gradient Descent (BGD), which is the original and most straightforward form of gradient descent. In BGD, the entire sample dataset is used to compute the gradient of the cost function during each iteration.

While BGD follows the exact gradient of the cost function and therefore converges steadily towards a minimum, it can be computationally intensive for large datasets because every update requires a pass over all samples. SGD and MBGD were developed to improve efficiency and convergence speed. These variations use only part of the data in each iteration, making each update cheaper while still seeking the optimal parameters.
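The Batch Gradient Descent update described above can be sketched as follows for a linear model trained with the halved mean square error. The helper names (`predict`, `bgd_step`), the toy dataset generated from $y = 1 + 2x$, the learning rate, and the zero initialization (used instead of random values so the run is reproducible) are all assumptions made for this illustration.

```python
def predict(theta, x):
    """Weighted combination of features; theta[0] is the bias term."""
    return theta[0] + sum(t * xi for t, xi in zip(theta[1:], x))


def bgd_step(theta, xs, ys, learning_rate):
    """One Batch Gradient Descent update using every sample."""
    m = len(xs)
    errors = [predict(theta, x) - y for x, y in zip(xs, ys)]
    # Gradient of the halved MSE: average of error * feature over all samples.
    grad_bias = sum(errors) / m
    grad_weights = [
        sum(e * x[j] for e, x in zip(errors, xs)) / m
        for j in range(len(theta) - 1)
    ]
    new_bias = theta[0] - learning_rate * grad_bias
    new_weights = [t - learning_rate * g for t, g in zip(theta[1:], grad_weights)]
    return [new_bias] + new_weights


# Hypothetical noise-free data generated from y = 1 + 2x.
xs = [[1.0], [2.0], [3.0], [4.0]]
ys = [3.0, 5.0, 7.0, 9.0]

theta = [0.0, 0.0]
for _ in range(5000):
    theta = bgd_step(theta, xs, ys, learning_rate=0.05)
print(theta)  # approaches [1.0, 2.0]
```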

Stochastic Gradient Descent

Stochastic gradient descent (SGD) selects only one sample at random to calculate the gradient in each iteration.

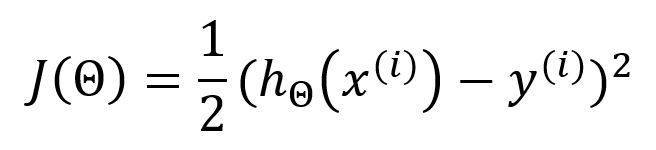

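When employing SGD, the above loss function should be expressed for the single selected sample $i$ as:

$$J(\theta) = \frac{1}{2} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

The partial derivative with respect to $\theta_j$ is:

$$\frac{\partial J(\theta)}{\partial \theta_j} = \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

Update $\theta_j$ as:

$$\theta_j := \theta_j - \eta \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$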

The computational cost of each update is reduced in SGD since it involves only one sample, thereby bypassing the need for summation and averaging. While this speeds up computation, the single-sample gradient is only a noisy estimate of the full gradient, so individual updates are less accurate.
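A minimal sketch of SGD on a linear model is shown below. The helper names, the toy data generated from $y = 1 + 2x$, the learning rate, and the fixed random seed are assumptions for illustration. Because this toy data is noise-free, the single-sample updates still settle on the exact parameters; on noisy data they would fluctuate around the minimum instead.

```python
import random


def predict(theta, x):
    """Weighted combination of features; theta[0] is the bias term."""
    return theta[0] + sum(t * xi for t, xi in zip(theta[1:], x))


def sgd_step(theta, x, y, learning_rate):
    """One SGD update computed from a single sample (x, y)."""
    error = predict(theta, x) - y
    new_bias = theta[0] - learning_rate * error
    new_weights = [t - learning_rate * error * xi for t, xi in zip(theta[1:], x)]
    return [new_bias] + new_weights


# Hypothetical noise-free data generated from y = 1 + 2x.
xs = [[1.0], [2.0], [3.0], [4.0]]
ys = [3.0, 5.0, 7.0, 9.0]

rng = random.Random(0)  # fixed seed so the run is reproducible
theta = [0.0, 0.0]
for _ in range(20000):
    i = rng.randrange(len(xs))  # pick one sample at random
    theta = sgd_step(theta, xs[i], ys[i], learning_rate=0.05)
print(theta)  # approaches [1.0, 2.0]
```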

Mini-Batch Gradient Descent

BGD and SGD both represent extremes - one involving all samples and the other only a single sample. Mini-batch Gradient Descent (MBGD) strikes a balance by randomly selecting a subset of samples for computation.
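The mini-batch idea can be sketched as follows: each update averages the gradient over a small random subset of the samples. The helper names, the batch size of 2, the toy data generated from $y = 1 + 2x$, and the other constants are assumptions for this illustration.

```python
import random


def predict(theta, x):
    """Weighted combination of features; theta[0] is the bias term."""
    return theta[0] + sum(t * xi for t, xi in zip(theta[1:], x))


def minibatch_step(theta, batch_xs, batch_ys, learning_rate):
    """One update using the average gradient over a mini-batch."""
    b = len(batch_xs)
    errors = [predict(theta, x) - y for x, y in zip(batch_xs, batch_ys)]
    grad_bias = sum(errors) / b
    grad_weights = [
        sum(e * x[j] for e, x in zip(errors, batch_xs)) / b
        for j in range(len(theta) - 1)
    ]
    new_bias = theta[0] - learning_rate * grad_bias
    new_weights = [t - learning_rate * g for t, g in zip(theta[1:], grad_weights)]
    return [new_bias] + new_weights


# Hypothetical noise-free data generated from y = 1 + 2x.
xs = [[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]]
ys = [1.0, 3.0, 5.0, 7.0, 9.0, 11.0]

rng = random.Random(0)  # fixed seed so the run is reproducible
batch_size = 2
theta = [0.0, 0.0]
for _ in range(5000):
    chosen = rng.sample(range(len(xs)), batch_size)  # random subset of samples
    theta = minibatch_step(
        theta,
        [xs[i] for i in chosen],
        [ys[i] for i in chosen],
        learning_rate=0.05,
    )
print(theta)  # approaches [1.0, 2.0]
```

Setting `batch_size` to 1 recovers SGD, and setting it to the full dataset size recovers BGD, which is why MBGD is described as sitting between the two extremes.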

Mathematical Basics

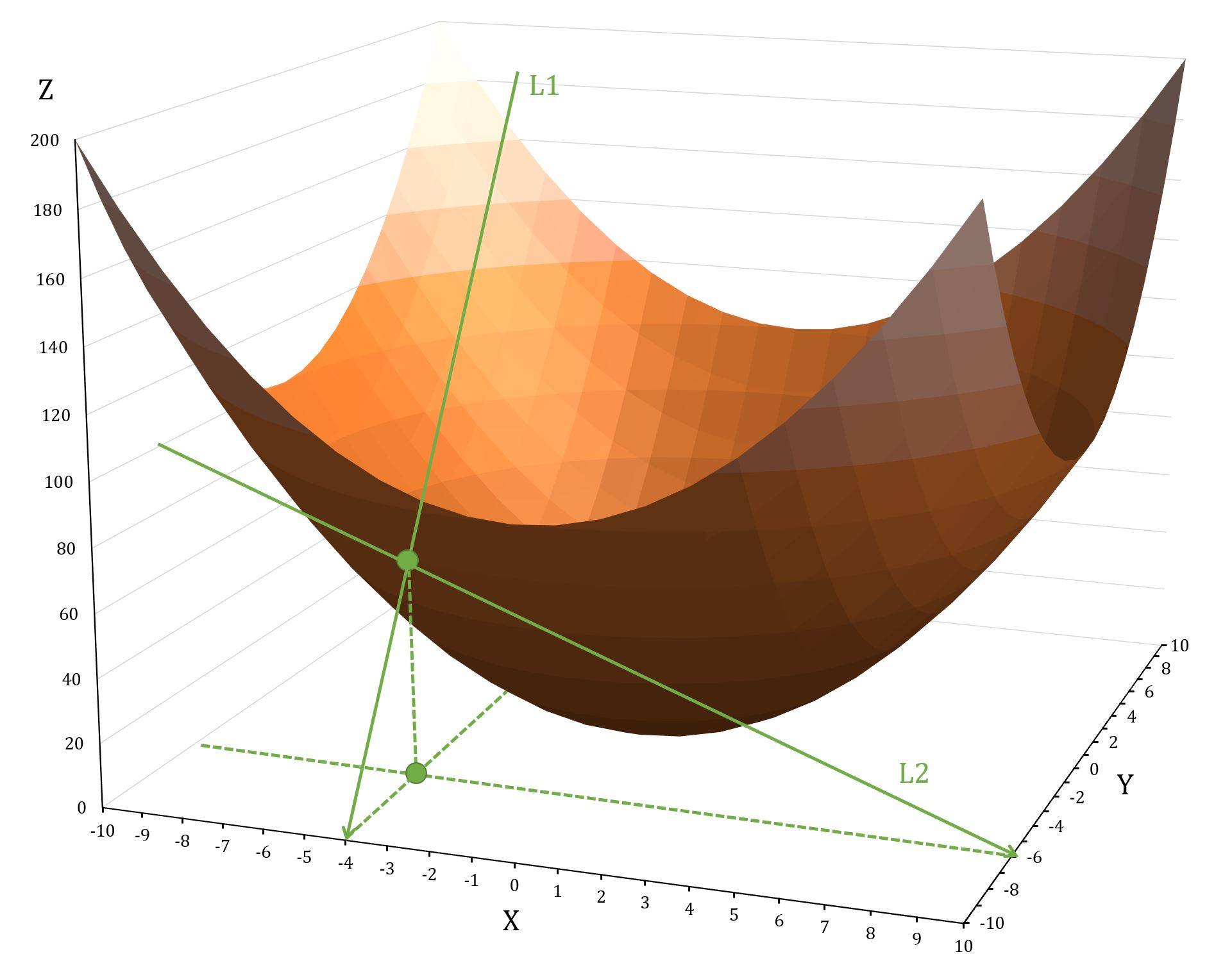

Derivative

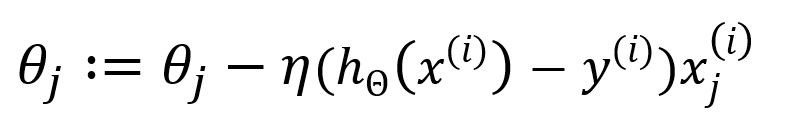

The derivative of a single-variable function $f(x)$ is often denoted as $f'(x)$ or $\frac{dy}{dx}$. It represents how $f(x)$ changes with respect to a slight change in $x$ at a given point.

Graphically, $f'(x)$ corresponds to the slope of the tangent line to the function's curve. The derivative at point $x_0$ is:

$$f'(x_0) = \lim_{\Delta x \to 0} \frac{f(x_0 + \Delta x) - f(x_0)}{\Delta x}$$

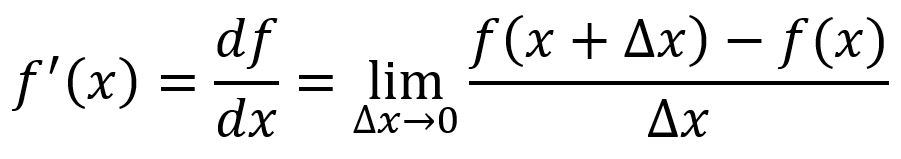

For example, , at point :

The tangent line is a straight line that just touches the function curve at a specific point and shares the same direction as the curve does at that point.
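The slope of the tangent line can be approximated numerically by evaluating the function at two nearby points. The sketch below uses a central difference; the helper names (`derivative`, `tangent_line`), the step size `h`, and the function $f(x) = x^2$ are assumptions chosen for illustration.

```python
def derivative(f, x, h=1e-6):
    """Approximate f'(x) with a central difference of half-width h."""
    return (f(x + h) - f(x - h)) / (2 * h)


def tangent_line(f, x0):
    """Return the tangent line to f at x0 as a function of x."""
    slope = derivative(f, x0)
    return lambda x: f(x0) + slope * (x - x0)


def square(x):
    return x ** 2


slope_at_3 = derivative(square, 3.0)  # the analytic derivative 2x gives 6 here
line = tangent_line(square, 3.0)
print(slope_at_3)
print(line(3.0))  # the tangent line touches the curve at x0, so this equals f(3) = 9
```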

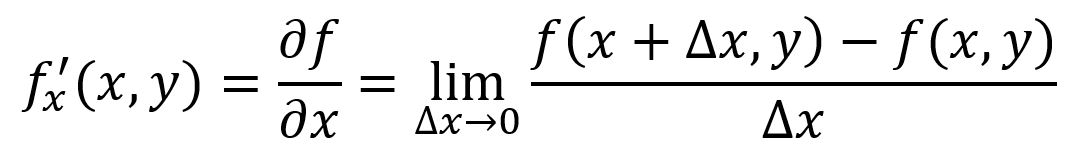

Partial Derivative

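The partial derivative of a multi-variable function measures how the function changes when one specific variable is varied while all other variables are held constant. For a function $f(x, y)$, its partial derivative with respect to $x$ at a particular point $(x_0, y_0)$ is denoted as $\frac{\partial f}{\partial x}$ or $f_x$:

$$\frac{\partial f}{\partial x}\bigg|_{(x_0, y_0)} = \lim_{\Delta x \to 0} \frac{f(x_0 + \Delta x, y_0) - f(x_0, y_0)}{\Delta x}$$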

For example, , at point , :

$\frac{\partial f}{\partial y}$ shows how the function changes as you move along the Y-axis, while keeping $x$ constant; $\frac{\partial f}{\partial x}$ shows how the function changes as you move along the X-axis, while keeping $y$ constant.
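Partial derivatives can be checked numerically by nudging one variable while leaving the others fixed. In this sketch, the helper `partial_derivative`, the step size, and the function $f(x, y) = x^2 y$ are assumptions made for illustration.

```python
def partial_derivative(f, point, index, h=1e-6):
    """Central-difference estimate of the partial derivative along one variable."""
    forward = list(point)
    backward = list(point)
    forward[index] += h   # vary only the chosen variable
    backward[index] -= h  # every other variable stays constant
    return (f(*forward) - f(*backward)) / (2 * h)


def f(x, y):
    """Assumed example function f(x, y) = x^2 * y."""
    return x ** 2 * y


df_dx = partial_derivative(f, (1.0, 2.0), index=0)  # analytic value: 2xy = 4
df_dy = partial_derivative(f, (1.0, 2.0), index=1)  # analytic value: x^2 = 1
print(df_dx, df_dy)
```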

Directional Derivative

The partial derivatives of a function describe how the output changes when moving slightly in the direction of one of the axes. When moving in a direction that is not parallel to any axis, the concept of the directional derivative becomes crucial.

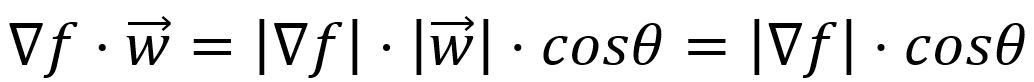

The directional derivative is mathematically expressed as the dot product of the vector composed of all partial derivatives of the function with the unit vector which indicates the direction of the change:

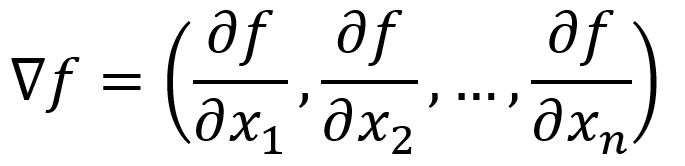

where , is the angle between the two vectors, and

Gradient

The gradient is the direction in which the output of a function has the steepest ascent. Finding it is equivalent to finding the maximum directional derivative. This occurs when the angle $\varphi$ between the vectors $\nabla f$ and $\vec{w}$ is $0$, as $\cos 0 = 1$, implying that $\vec{w}$ aligns with the direction of $\nabla f$. $\nabla f$ is thus called the gradient of the function.

Naturally, the negative gradient points in the direction of the steepest descent.
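The claim that the directional derivative is largest along the gradient and smallest along the negative gradient can be checked numerically. The sketch below scans unit directions at one-degree intervals; the function $f(x, y) = x^2 + y^2$, the point $(1, 2)$, and the helper name `directional_derivative` are assumptions chosen for illustration.

```python
import math


def directional_derivative(gradient, direction):
    """Dot product of the gradient with the unit vector along `direction`."""
    norm = math.hypot(*direction)
    unit = [d / norm for d in direction]
    return sum(g * u for g, u in zip(gradient, unit))


# Gradient of the assumed function f(x, y) = x^2 + y^2 at the point (1, 2).
gradient = [2.0, 4.0]
gradient_norm = math.hypot(*gradient)

along_gradient = directional_derivative(gradient, gradient)
against_gradient = directional_derivative(gradient, [-g for g in gradient])

# Scan unit directions at every whole degree around the circle.
scanned = [
    directional_derivative(
        gradient, [math.cos(math.radians(a)), math.sin(math.radians(a))]
    )
    for a in range(360)
]

print(along_gradient, gradient_norm)  # steepest ascent equals the gradient's length
print(against_gradient)               # steepest descent is its negative
print(max(scanned))                   # no scanned direction exceeds the gradient's length
```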

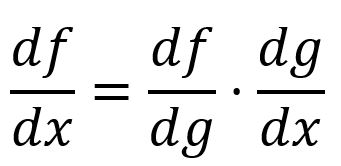

Chain Rule

The chain rule describes how to calculate the derivative of a composite function. In the simplest form, the derivative of a composite function $f(g(x))$ can be calculated by multiplying the derivative of $f$ with respect to $g$ by the derivative of $g$ with respect to $x$:

$$\frac{df}{dx} = \frac{df}{dg} \cdot \frac{dg}{dx}$$

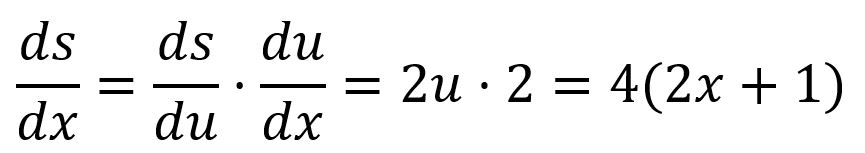

For example, is composed of and :

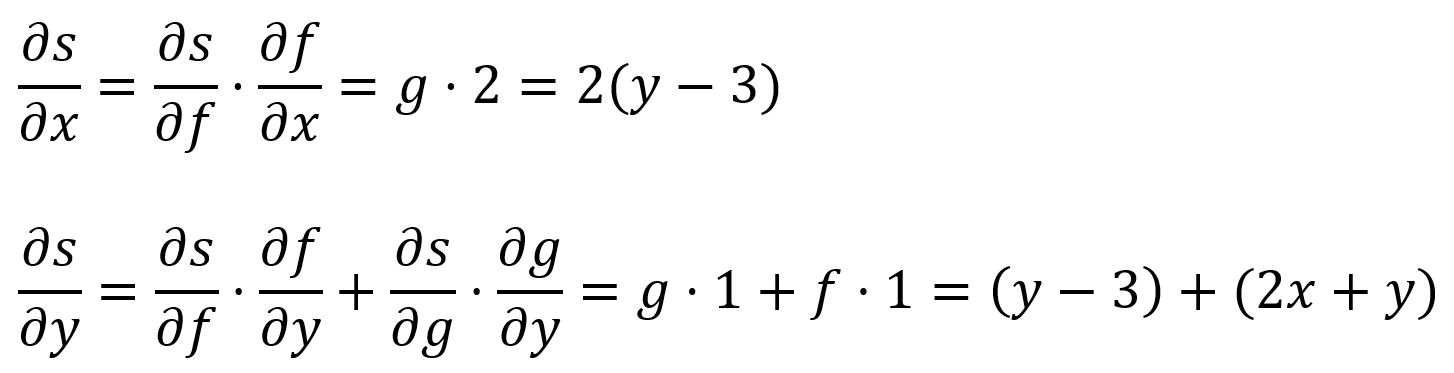
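The single-variable chain rule can be verified numerically by comparing the product of the two derivatives with a finite-difference estimate of the composite function. The functions below ($f(u) = u^2$ and $g(x) = 3x + 1$) and the helper names are assumptions picked for this sketch, not necessarily the functions used in the example above.

```python
def g(x):
    """Inner function g(x) = 3x + 1, with derivative 3."""
    return 3 * x + 1


def f(u):
    """Outer function f(u) = u^2, with derivative 2u."""
    return u ** 2


def composite(x):
    return f(g(x))


def chain_rule_derivative(x):
    """Derivative of f(g(x)) as (df/du evaluated at u = g(x)) * (dg/dx)."""
    df_du = 2 * g(x)
    dg_dx = 3
    return df_du * dg_dx


def numerical_derivative(func, x, h=1e-6):
    """Central-difference estimate used as an independent check."""
    return (func(x + h) - func(x - h)) / (2 * h)


analytic = chain_rule_derivative(2.0)           # 2 * 7 * 3 = 42
numeric = numerical_derivative(composite, 2.0)  # should agree closely
print(analytic, numeric)
```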

In a multi-variable composite function, the partial derivatives are obtained by applying the chain rule to each variable.

For example, is composed of and and :